The child safety problem on platforms is worse than we knew

A startling new report finds far more young kids using platforms than we suspected — and they’re having sexual interactions with adults in huge numbers

Millions of young children are using platforms years before they turn 13, and their presence could put many of them in danger. An alarming new study shared exclusively with Platformer has found that minors in the US often receive abuse, harassment, or sexual solicitation from adults on tech platforms. For the most part, though, children say they are not informing parents or other trusted adults about these interactions, and are instead turning for support to tech platforms, whose limited blocking and reporting tools have failed to address the threats they face.

The report from Thorn, a nonprofit organization that builds technology to defend children from sexual abuse, identifies a disturbing gap in efforts by Snap, Facebook, YouTube, TikTok, and others to keep children safe. Officially, children are not supposed to use most apps before they turn 13 without adult supervision. In practice, though, the majority of American children are using apps anyway. And even when they block and report bullies and predators, the majority of children say that they are quickly re-contacted by the same bad actors — either via new accounts or separate social platforms.

Among the report’s key findings:

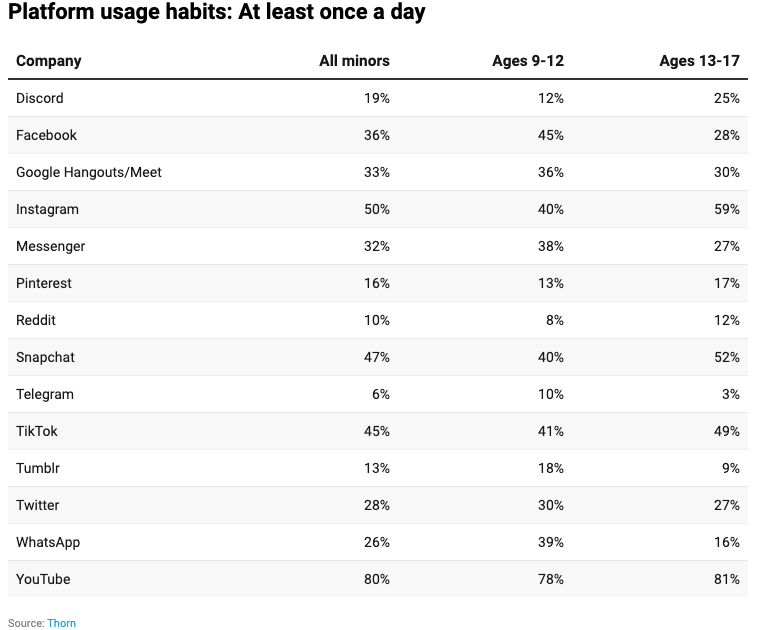

- Children are using major platforms in large numbers long before they turn 13: 45 percent of children 9-12 say they use Facebook daily; 40 percent use Instagram; 40 percent use Snapchat; 41 percent use TikTok; and 78 percent use YouTube.

- Children report having online sexual interactions at high rates, both with their peers and with people they believe to be adults: 25 percent of kids 9-17 reported having had a sexually explicit interaction with someone they thought was 18 or older, compared to 23 percent of participants who had a similar experience with someone they believed to be a minor.

- Children are more than twice as likely to use platform blocking and reporting tools as they are to tell parents and other caregivers about what happened: 83 percent of 9- to 17-year-olds who reported having an online sexual interaction reacted with reporting, blocking, or muting the offender, while only 37 percent said they told a parent, trusted adult, or peer.

- The majority of children who block or report other users say those same users quickly find them again online: More than half of children who blocked someone said they were contacted again by the same person, either through a new account or a different platform. This was true both for people children knew in real life (54 percent) and people they had only met online (51 percent).

- Children who identify as LGBTQ+ experience all of these harms at higher rates than their non-LGBTQ+ peers: 57 percent of youth who identify as LGBTQ+ said they have had potentially harmful experiences online, compared to 46 percent of non-LGBTQ+ youth. They also had online sexual interactions at much higher rates than their peers.

Thorn’s research comes at a time when regulators are examining platforms’ child safety efforts with increasing scrutiny. In a March hearing, US lawmakers criticized Facebook and Google for the potential effect their apps could have on children. And this week, 44 attorneys general wrote a letter to Facebook CEO Mark Zuckerberg urging him to abandon a plan to create a version of Instagram for children.

The Thorn report could trigger heightened scrutiny of the way young children use platforms, and the relatively few protections that tech companies have currently put in place to protect them or offer them support. It could also push the industry to collaborate on cross-platform solutions between Facebook, Google, Snap and others that Thorn says are needed to fully address the threats children face.

Taken together, the findings suggest that platforms, parents, and governments need to work harder to understand how children are using technology from the time they are in elementary school, and develop new solutions to protect them as they explore online spaces and express themselves.

“Platforms have to be better at designing experiences,” said Julie Cordua, Thorn’s CEO, in an interview. “Adults need to create safe spaces for kids to have conversations. … And then on the government and policy side, lawmakers need to understand kids' online experiences at this level of detail. You’ve got to dive into the details and understand the experiences of youth.”

Data on these subjects is difficult to collect for several reasons, many of them obvious: the topics involved are extremely sensitive, and much of the best information is held within the private companies running the platforms. To do its work, Thorn had 1,000 children ages 9 to 17 fill out a 20-minute online survey with their parents’ consent. The survey was conducted from October 25 to November 11, and the margin of sampling error is +/- 3.1 percent.
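For readers wondering where a figure like +/- 3.1 percent comes from, here is a rough sketch of the textbook calculation. It assumes a simple random sample, a 95 percent confidence level, and the worst-case proportion of 50 percent. Thorn's report may have used a different method (weighting, for instance), and the function name is only illustrative.

```python
import math


def margin_of_error(sample_size, proportion=0.5, z_score=1.96):
    """Approximate margin of sampling error for a simple random sample.

    Assumptions (not taken from the Thorn report): 95 percent confidence
    (z = 1.96) and a worst-case proportion of 0.5.
    """
    return z_score * math.sqrt(proportion * (1 - proportion) / sample_size)


# 1,000 respondents, matching the survey size described above.
moe = margin_of_error(1000)
print(f"+/- {moe * 100:.1f} percentage points")  # prints "+/- 3.1 percentage points"
```

Under those assumptions, a sample of 1,000 lands at about 3.1 points, which matches the reported figure. Results for smaller subgroups, such as 9- to 12-year-olds alone, would carry a wider margin.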

The findings of the survey tell multiple stories about the current relationship between children and technology. The most pressing one may be that because young children are not supposed to use platforms, platforms often do not build safety tools with them in mind. The result is a generation of children, some of them still in elementary school, attempting to navigate blocking and reporting tools that were designed for older teens and adults.

“Kids see platforms as a first line of engagement,” Cordua said. “We need to lean into what kids are doing, and beef up the support mechanisms so they’re not putting the onus on the child. Put the onus on the platform that’s building the experience and make it safe for all.”

An obvious thing platforms can do is to rewrite the language they employ for user reports to more accurately reflect the harms taking place there. Thorn found that 22 percent of minors who attempted to report that their nudes had been leaked didn’t feel like platform reporting systems permitted them to report it. In Silicon Valley terms, it’s a user experience problem.

“While minors say they are confident in their ability to use platform reporting tools to address their concerns, when given a series of commonly available options from reporting menus, many indicated that none of the options fit the situation,” the Thorn report says. “Nearly a quarter said they ‘don’t feel like any of these choices fit the situation’ of being solicited for (self-generated nudes) by someone they believe to be an adult (23 percent) or someone they believe to be under 18 (24 percent).”

Thorn has other ideas for what platforms in particular should do here. For example, they could invest more heavily in age verification. Even if you accept that kids will always find ways into online spaces meant for adults, the sheer numbers can be surprising. Thorn found that 27 percent of 9- to 12-year-old boys have used a dating app; most dating apps require users to be at least 18.

Platforms could integrate crisis support phone numbers into messaging apps to help kids find resources when they have experienced abuse. They could share block lists with each other to help identify predators, though this would raise privacy and civil rights concerns. And they could invest more heavily in preventing ban evasion so that predators and bullies can’t easily create alternate accounts after they are blocked.

In their current state, Cordua told me, platform reporting tools can feel like fire alarms that have had their wires cut.

“The good thing is, kids want to use these tools,” she said. “Now we need to make sure they work. This is an opportunity for us to ensure that if they pull that fire alarm, people respond.”

Too often, though, no one does. One in three minors who have reported an issue said the platform took more than a week to respond, and 22 percent said they had made a report that was never resolved. “This is a critical window during which the child remains vulnerable to continued victimization,” the report authors say.

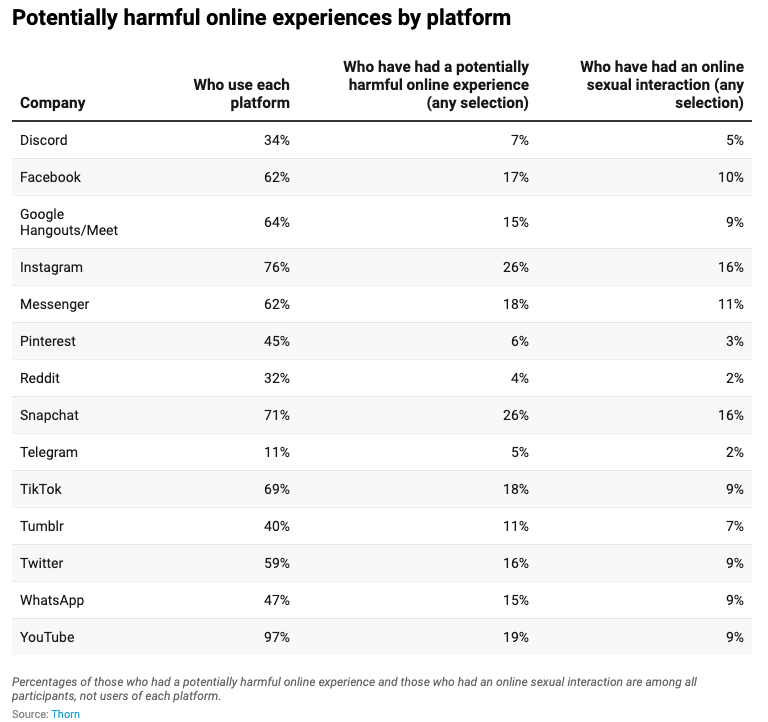

The Thorn report found children on every platform where it looked. But some stood out for the frequency with which children reported experiencing harm. The platforms with the highest number of minors reporting potential harm were Snapchat (26 percent), Instagram (26 percent), YouTube (19 percent), TikTok (18 percent), and Messenger (18 percent). The platforms where the most minors said they had an online sexual interaction were Snapchat (16 percent), Instagram (16 percent), Messenger (11 percent), and Facebook (10 percent).

I shared Thorn’s findings with these platforms and asked them to respond. Here’s what they told me.

- Snap: “We really appreciate the extensive findings and related recommendations in Thorn’s research. The prevalence of unwanted sexual contact is horrific, and this study will help inform our ongoing efforts to combat these behaviors on Snapchat. In recent months, we have been increasing our in-app education and support tools for Snapchatters, working to revamp our in-app reporting tools, putting in place additional protections for minors, and expanding resources for parents. After reviewing this research, we are making additional changes to make us be even more responsive to the issues raised by the report.”

- Facebook and Instagram: “We appreciate Thorn’s research and value our collaboration with them. We’ve made meaningful progress on these issues, including restricting Direct Messages between teens and adults they don't follow on Instagram, helping teens avoid unwanted chats with adults, making it harder for adults to search for teens, improving reporting features, and updating our child safety policies to include more violating content for removal. However, Thorn’s research, while good, stopped far short of the global view on this issue when they excluded Apple’s iMessage, which is used heavily by teens, is preloaded on every iPhone, and is bigger than Messenger and Instagram Direct combined.” (Thorn responded to the swipe at Apple by saying it focused on social platforms because that is where many of these inappropriate relationships begin — typically they migrate to messaging apps from elsewhere.)

- YouTube: “YouTube doesn’t allow content that endangers the emotional and physical well-being of minors and we have strict harassment policies prohibiting cyberbullying or content that threatens individuals in any way. Additionally, because YouTube has never been for people under 13, we created YouTube Kids in 2015 and recently announced a supervised account option for parents who have decided their tweens or teens are ready to explore YouTube.”

- TikTok: “Protecting minors is vitally important, and TikTok has taken industry-leading steps to promote a safe and age-appropriate experience for teens. These include setting accounts ages 13-15 to private by default, restricting direct messaging for younger teens, and committing to publish information regarding removals of suspected underage accounts in our Transparency Reports.”

While platforms have implemented a variety of partial solutions to the issue of underage access and the harms that can follow, there remains a lack of industry-wide standards or collaboration on solutions. Meanwhile, parents and lawmakers seem largely ignorant of the challenges.

For too long, tech companies have been able to set aside problems related to the presence of children on their platforms simply by saying that kids are not allowed there, and that the companies work hard to remove them. The Thorn report illustrates the degree to which harms remain on those platforms in spite of, and even partially because of, this stance. To truly protect children, the companies will need to take decisive and more coordinated action.

“Let’s deal with the reality that kids are in these spaces, and re-create it as a safe space,” Cordua said. “When you build for the weakest link, or you build for the most vulnerable, you improve what you’re building for every single person.”

The Ratio

Today in news that could affect public perception of the big tech companies.

⬇️ Trending down: Microsoft-owned LinkedIn has been accused of censorship after the account of a prominent critic of China was frozen and his posts were removed. “The comments … called the Chinese government a ‘repressive dictatorship’ and criticized the country’s state media organizations as ‘propaganda mouthpieces.’” (Ryan Gallagher / Bloomberg)

Governing

⭐ Instagram and Twitter blamed the improper removal of posts about the conflict in Palestine on “glitches.” Social media has lit up over the past few days with complaints about various removals and account suspensions. Here’s Maya Gebeily with Thomson Reuters Foundation:

Instagram said in a statement that an automated update last week caused content re-shared by multiple users to appear as missing, affecting posts on Sheikh Jarrah, Colombia, and U.S. and Canadian indigenous communities.

“We are so sorry this happened. Especially to those in Colombia, East Jerusalem, and Indigenous communities who felt this was an intentional suppression of their voices and their stories – that was not our intent whatsoever,” Instagram said.

Related: Instagram posts about the Al-Aqsa Mosque, the third-holiest site in Islam, were mistakenly removed after being linked to terrorism. (Ryan Mac / BuzzFeed)

A Facebook contractor working as a content moderator in Ireland testified that the company should set hard limits on the amount of disturbing content that reviewers see. The fact that the company doesn’t even seem to be exploring this issue is an ongoing scandal. (The Journal)

An Associated Press investigation “found that China’s rise on Twitter has been powered by an army of fake accounts that have retweeted Chinese diplomats and state media tens of thousands of times.” The accounts typically do not disclose that the tweets are sponsored by the government. (Erika Kinetz / AP)

The US Census began using differential privacy techniques to prevent individual citizens from being identified, but now activist groups say those techniques have made the data unreliable. A group of 17 states is now suing to stop the practice. (Todd Feathers / The Markup)

Recommendations on the fast-growing video platform Rumble promote misinformation and conspiracy theories about vaccines three times as often as they promote accurate information. The site has grown from 5 million monthly visitors in September to 81 million last month. (Ellie House, Alice Wright, and Isabelle Stanley / Wired)

The United Kingdom unveiled a law to fine social media firms that fail to remove online abuse. As described here, the law is extremely confusing — banning both “hate speech” and “discriminating against particular political viewpoints,” while also requiring companies “to safeguard freedom of expression.” (Michael Holden / Reuters)

A Republican lawmaker in Michigan introduced a bill to register and fine fact checkers. “Fact checkers found to be in violation of the registry requirements could be fined $1,000 per day of violation.” (Beth LeBlanc and Craig Mauger / Detroit News)

Amazon saved $300 million in taxes after a European Union court annulled a 2017 decision from the European Commission, the EU’s top antitrust authority. “The ruling is a significant blow to Margrethe Vestager, an executive vice president of the commission who is leading a campaign to curb alleged excesses by some of the world’s largest tech companies.” (Sam Schechner / Wall Street Journal)

Amazon sued a group of unnamed individuals for allegedly organizing text message-based scams purporting to come from the company. “The scam messages made fake offers in hopes of getting targets to click on links that take them to specific advertisers and websites.” (Laura Hautala / CNET)

A look at the steep challenges facing fact-checkers in Modi’s India, where the government continuously uses social media accounts to spread misinformation. Another great story from Rest of World, my favorite new publication of recent years, which celebrates its first birthday today. (Sonia Faleiro / Rest of World)

Related: India’s epidemic of false COVID-19 information. “As patients and families frantically seek treatment, elected officials—and some physicians—have fuelled denialism and specious talk of miracle cures.” (Rahul Bhatia / New Yorker)

Mauritius, an island nation of 1.3 million off the coast of East Africa, has proposed a law that “would be able to decrypt, re-encrypt, and archive all social media traffic in Mauritius.” Will social networks stand for this? Among other affronts, the law would also label any online criticism of the government as “abuse and misuse.” (Ariel Saramandi / Rest of World)

Industry

⭐ Snap suspended two anonymous messaging apps amid a cyberbullying lawsuit filed after a teenager committed suicide. The apps are Yolo and LMK, which integrated into Snapchat using a software development kit. I find this particularly frustrating given that I wrote about how anonymous messaging apps often lead to teen suicide as far back as 2013.

Apple employees circulated a petition demanding an investigation into the hiring of ad tech executive Antonio García Martínez. Martínez, a former Facebook product manager and author of the book Chaos Monkeys, has been criticized for making statements employees described as racist and sexist. It’s a rare public display of worker organizing at the company. (Zoe Schiffer, Casey Newton, and Elizabeth Lopatto / The Verge)

Five takeaways about Ximalaya, China’s largest audio platform. If you’ve started to doubt what the opportunity is for Spotify, or Clubhouse, read about what Ximalaya is doing. (Tanay Jaipuria)

Twitter has scooped up several employees over the past two years known for their work on diversity, equity, and inclusion. Most recently it poached Lara Mendonça, former head of product design at Bumble, to lead design efforts around “meaningful conversation.” (Anna Kramer / Protocol)

Coinbase announced it would no longer let new hires negotiate salaries, as part of its ongoing effort to reduce the amount of employee opinions in the workplace. Looking forward to seeing what’s next on the company’s my-way-or-the-highway roadmap. (LJ Brock / Coinbase)

You can now sell NFTs on eBay. But the payments are made in US dollars rather than cryptocurrencies. (MK Manoylov / The Block)

Those good tweets

Just had a nice phone call with my dad (emotionally distanced of course)

— fav nathans (@FavNathans) 3:51 PM ∙ May 11, 2021

Hi I’m in Australia and saw a specialist this morning, gave them my Medicare card and by 9pm the rebate showed up in my bank account oooh socialized medicine is terrifying I have no freedom please help me

— Ben Lee (@benleemusic) 9:51 PM ∙ May 10, 2021

I think there’s been an accident

— Joe Russell (@Joebob) 5:30 PM ∙ May 6, 2021

shoutout my door dash driver on his grind fr

— joshua🐆 (@jjjjjjjjjjjosh) 4:55 PM ∙ May 2, 2021

Talk to me

Send me tips, comments, questions, and age verification schemes: casey@platformer.news.