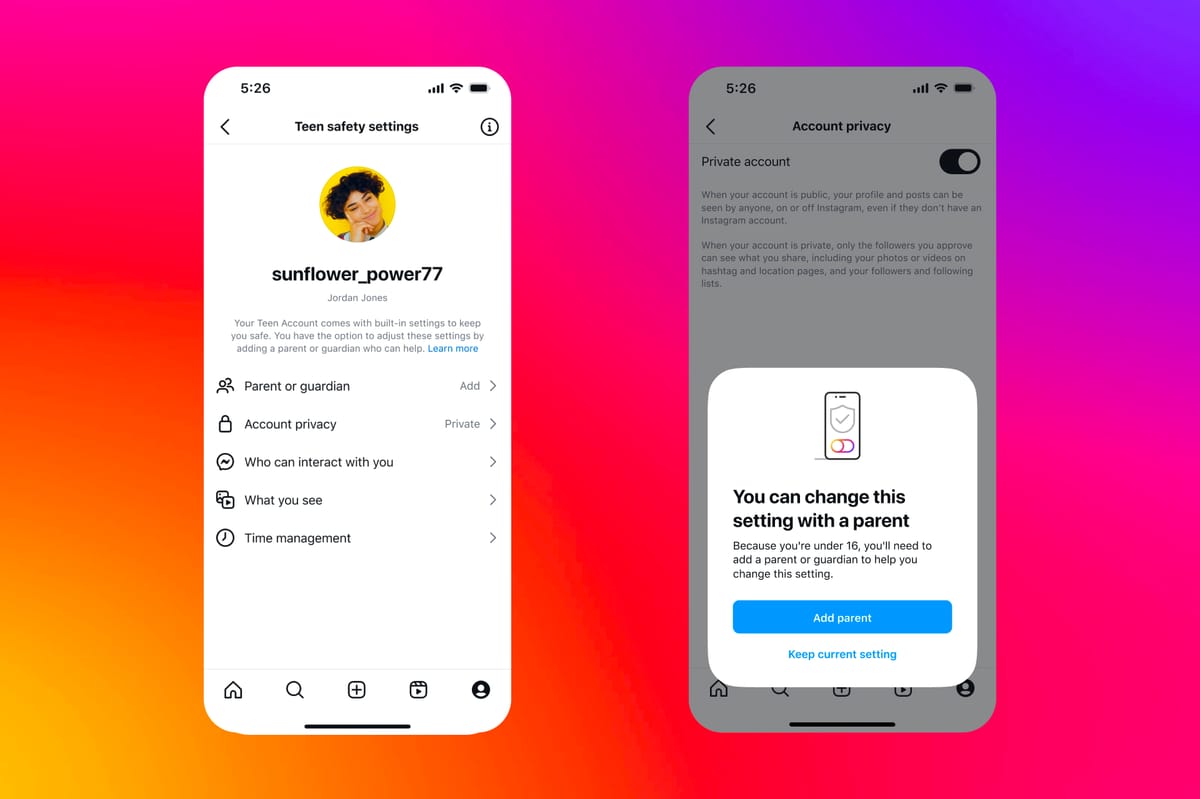

Instagram makes teen accounts private by default

Under siege by state lawmakers, Meta makes its biggest move yet to address harms to minors. Will it be enough?

Programming note: The first edition of the week is landing on Tuesday morning to accommodate some news. A second edition will go out to paid members this afternoon covering the Snap Partner Summit in Los Angeles, which I am attending.

I.

Instagram announced a sweeping overhaul of its privacy and safety features for teenagers on Tuesday, making accounts for tens of millions of users under 16 private by default and requiring that they get parental permission to change many settings. Younger users will also be opted into the most restrictive settings for messaging and content recommendations, and will start receiving a prompt encouraging them to close the app after using Instagram for an hour each day.

The full set of changes, which come amid mounting global concern that social media is detrimental to the mental health and safety of young people, represent the most significant voluntary changes an American social media company has made to limit teens’ access to an app. But Meta stopped short of some of the changes that lawmakers around the United States have called for, including requiring parental permission to create accounts and placing more limitations around recommendation algorithms.

For that reason, it’s unclear to what degree Instagram’s new experience for teenagers will satisfy regulators — or begin to calm the broader societal backlash against social products used by young people. But Meta’s move will likely create significant new pressure on TikTok, Snapchat, and other social apps popular among young people to adopt similar measures.

“Our north star is really listening to parents,” said Naomi Gleit, head of product at Meta, in an interview with Platformer. “They're concerned about three areas: One is who can contact their kids. Two is what content their kids are seeing. And three is how much time they're spending online. And so teen accounts are designed to address those three concerns.”

Teenagers who create new accounts starting today will be opted in to the new experience. It will come to teens with existing accounts in the United States, Canada, United Kingdom, and Australia in 60 days, and is expected to roll out globally beginning in January.

The changes include:

- Placing all users who are under 16 into private accounts. Teens will have to accept new followers in order to interact with them or make their content visible. (Users who are 16 or older will not have their accounts made private automatically by Meta.)

- Creating new parental supervision tools that let parents and guardians manage their children’s Instagram settings and see who their teens are messaging. (Parents cannot view the contents of those messages.)

- Making it so that by default, teens can only be messaged by people that they follow or have already messaged. They also will not be able to be tagged or mentioned by accounts that they do not follow.

- Placing teens into more restrictive content settings, limiting the types of recommendations they get from accounts they do not follow in Reels or on Instagram’s Explore page. Meta will also filter offensive words and phrases from their comments and direct messages.

- Showing teens a new “daily limit” prompt after they have used Instagram for 60 minutes.

- Introducing “sleep mode,” which will automatically turn off Instagram notifications for teens between 10 p.m. and 7 a.m.

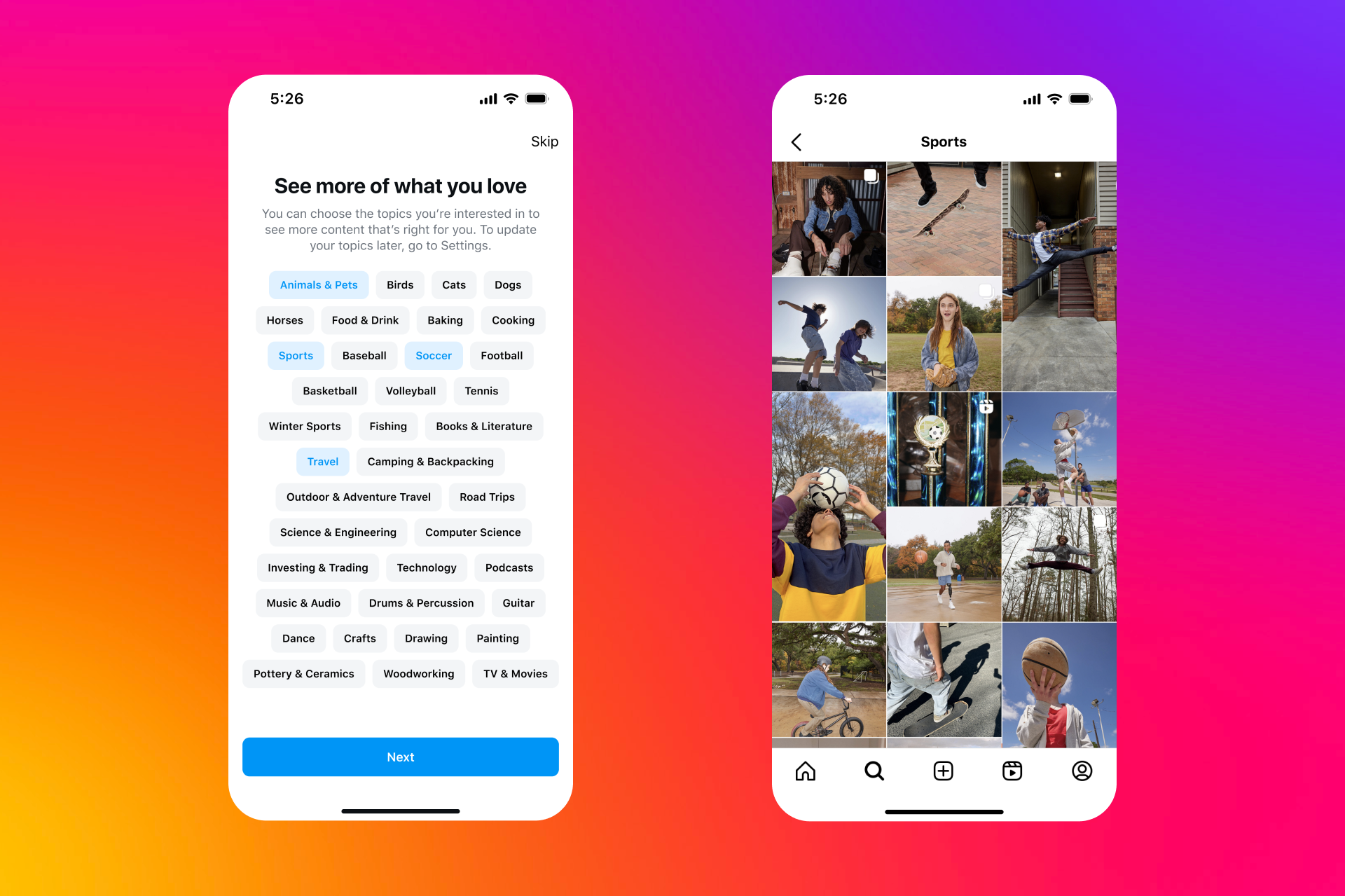

- Asking teens to choose from more than 30 safe topics they are interested in so that Instagram can show them more of those on Reels and in in-feed recommendations. (“They can basically go to Explore and see a whole page of cats, if they want to,” Gleit said.)

Meta also said it is developing new age verification requirements and building new automated systems to find underage accounts and place them in more restrictive settings.

To change those settings, teens will have to designate a parent or guardian’s Instagram account and allow an adult to supervise their settings. In practice, Meta will have no idea whether the adult account linked to a teen’s is actually their parent or guardian. And it fully expects some teens to try to circumvent the requirement.

“Teens are very creative,” Gleit said. “So they're obviously going to find workarounds.”

Still, she said, the company is prepared for the more obvious hacks, and plans to take various steps to prevent evasion.

II.

The new teen experience comes just under a year after 41 states and the District of Columbia sued Meta, alleging that Instagram is addictive and harmful. That lawsuit was itself the product of a two-year investigation, which began after revelations from documents leaked by Meta whistleblower Frances Haugen, particularly some internal research showing that a minority of teenage girls reported a negative effect on their mental health after using Instagram.

Last week, 42 attorneys general endorsed a proposal from US Surgeon General Vivek Murthy to add a warning label to social media.

The question now is whether the changes announced today will stave off more drastic action from regulators around the world. In the United States, Congress has failed to pass a single law that would place new child safety requirements on platforms. (The Kids Online Safety Act, which passed the Senate in July, has stalled in the House of Representatives.) A growing number of states have passed digital child safety laws of their own, but for the most part they have been blocked from being implemented on First Amendment grounds.

When Meta was sued last year, the company publicly pointed to 30 features it had introduced in an effort to make its apps safer for young people. Privately, executives complained that their company had been caught up in a moral panic. Meta CEO Mark Zuckerberg seemed to hint at this last week during a taping of the “Acquired” podcast, when he referred to “allegations about the impact of the tech industry or our company which are just not founded in any fact” and said that the company would do less apologizing going forward.

The changes announced today have been under development for many months. But it’s hard not to read them as a tacit admission that on many key dimensions, Meta’s critics have been right. Letting teenagers create and manage their own accounts has left them vulnerable to bullies and predators, and filling up their feeds with whatever an automated system predicts they will engage with has in many cases been detrimental to their health.

These concerns have been raised internally for years, as Haugen’s leaked documents revealed. But Meta dismissed some of that internal research by saying that the sample size was small, and that it had been taken out of context.

“It is simply not accurate that this research demonstrates Instagram is ‘toxic’ for teen girls,” Pratiti Raychoudhury, then Meta’s head of research, wrote in 2021. “The research actually demonstrated that many teens we heard from feel that using Instagram helps them when they are struggling with the kinds of hard moments and issues teenagers have always faced.”

Of course, with more than 1 billion users on Instagram, both things are true. “Many teens” use Instagram to find community and for positive self-expression — and many other teens get bullied, or fall victim to grooming or sextortion, or experience other harms. The question has always been to what extent Meta itself would address those harms of its own accord, and to what extent it would have to be dragged into doing so by regulators.

Gleit played down the idea that the changes seemed to validate long-standing complaints about child safety on Instagram.

“I would say that in areas like this, there's always more that we can do to give teens and parents more of what they want and less of what they don't want,” she said. “It really is just sort of an evolution of the protections that we've had.”

At the same time, Gleit told me the company believes that the changes will meaningfully reduce usage of the app among teenaged users.

“I think that teens might use Instagram less in the short term,” she said. “I think they might use Instagram differently in the short term. But I think that this will be best for teens and parents over the long term. And that's what we're focused on.”

Correction: This post originally said Meta is also developing new technologies to identify and remove users who are 13 and under. Today's changes only apply to 13-17-year-olds.

Governing

- TikTok’s appeal case against the divest-or-ban law started Monday in court. Here’s a timeline of events and what exactly they’re litigating. (Gaby Del Valle and Lauren Feiner / The Verge)

- A panel of federal judges appeared somewhat skeptical of TikTok’s arguments that Congress lacks the authority to pass the law and that the company was being unfairly singled out. (Sapna Maheshwari and David McCabe / New York Times)

- ByteDance’s newer social app Lemon8, which some creators have turned to as a backup plan, surged to the top 10 in the App Store. (Sarah Perez / TechCrunch)

- Elon Musk was widely and justly criticized after posting “and no one is even trying to assassinate Biden/Kamala” on X following a second apparent Trump assassination attempt. He deleted the post and claimed it had been a joke. But still, a new low for a man who is still somehow a defense contractor. (Flynn Nicholls / Newsweek)

- Musk called the Australian government “fascists” for a proposed law that would fine tech companies up to 5 percent of their global revenue for enabling misinformation. (Renju Jose / Reuters)

- The Brazilian government withdrew $3.3 million from X and Starlink’s bank account to pay for Supreme Court-imposed fines. (Daniel Carvalho / Bloomberg)

- X will reportedly not be regulated under the Digital Markets Act because it doesn’t meet certain revenue thresholds. (Samuel Stolton / Bloomberg)

- A look at Musk’s growing security detail. (Kirsten Grind and Jack Ewing / New York Times)

- Musk put hundreds of thousands of dollars into a local race in Texas in an unsuccessful attempt to unseat a prosecutor backed by Democratic billionaire donor George Soros, this investigation found. (Joe Palazzolo and Dana Mattioli / Wall Street Journal)

- Meta banned RT, Russia's leading state media outlet, days after US authorities accused it of acting as an extension of Russia's spy agencies. Your move, YouTube. (Kevin Collier and Phil Helsel / NBC News)

- Meta entered into a deal with Google in 2018 after determining that it couldn’t successfully compete for ads sold on websites, a former Facebook executive testified in the DOJ antitrust trial against Google. (Leah Nylen / Bloomberg)

- Google’s 20 percent commission for ad revenue, which executives have worried about internally, is a sign of the company’s monopoly over ads, the Justice Department argues. 20 percent might sound high, but don't worry — the cost gets passed on to you as a consumer. (Lauren Feiner / The Verge)

- The Springfield woman behind one of the first Facebook posts spreading the baseless claim about Haitian immigrants eating pets says she didn’t mean for the post to spread so widely. (Alicia Victoria Lozano / NBC News)

- AI chatbots may help reduce the strength of a person’s belief in a conspiracy theory, a new study suggested. (Jennifer Ouellette / Ars Technica)

- A look at how the Harris campaign’s TikTok team spun last week’s debate performances to create viral videos. (Drew Harwell / Washington Post)

- Trump Media shares jumped by more than 25 percent after Donald Trump said he is not selling his shares. (Kevin Breuninger and Dan Mangan / CNBC)

- A look at California’s divisive AI bill, which Governor Gavin Newsom has until the end of this month to decide on. (George Hammond and Cristina Criddle / Financial Times)

- Google, Amazon, Microsoft and Meta are fighting a proposal in Ohio that would increase the upfront energy costs the companies would have to pay for data centers. (Caroline O’Donovan / Washington Post)

- The FDA approved the sleep apnea detection feature for Apple Watch Series 9, Series 10, and Watch Ultra 2. (Brian Heater / TechCrunch)

- Apple asked a court to drop its lawsuit against spyware firm NSO Group, saying that Israeli officials’ apparent seizure of critical files from the firm’s headquarters could undermine the suit’s potential. (Joseph Menn / Washington Post)

- Meta says it’s restarting efforts to train AI on public Facebook and Instagram posts in the UK, saying it “incorporated regulatory feedback” for an opt-out feature. (Paul Sawers / TechCrunch)

- Foreign minister Stephane Sejourne was picked by France as its new candidate for the EU Commission after former commissioner Thierry Breton quit. Would love to know the back story on Breton leaving. (Michel Rose and Foo Yun Chee / Reuters)

- Amazon and Walmart’s Flipkart reportedly violated Indian antitrust laws by giving certain sellers priority. (Aditya Kalra / Reuters)

Industry

- CEO Sam Altman reportedly told OpenAI employees that the company’s structure will move away from being controlled by a non-profit this year. (Kali Hays / Fortune)

- OpenAI’s $150 billion valuation is reportedly contingent upon whether it can upend its corporate structure and remove a profit cap for investors. (Krystal Hu and Kenrick Cai / Reuters)

- Altman is leaving the board’s safety and security committee, which will now consist of independent members. (Ina Fried / Axios)

- ByteDance is reportedly planning to mass produce two AI chips of its own design by 2026. (Wayne Ma and Qianer Liu / The Information)

- While Microsoft has been touting AI solutions to climate change, it has been marketing its technology to fossil fuel companies including ExxonMobil and Chevron, this investigation reveals. (Karen Hao / The Atlantic)

- Microsoft announced its Copilot Pages feature, which lets users work with the Copilot chatbot’s responses in a collaborative setting. (Tom Warren / The Verge)

- Microsoft inked a deal with Vodafone for the telecom company to use AI assistants for Office, along with a suite of new AI tools that can help workers create Excel charts and collaborate. (Dina Bass and Matt Day / Bloomberg)

- United Airlines will use Starlink’s internet service for in-flight Wi-Fi, the company said. (Micah Maidenberg and Alison Sider / Wall Street Journal)

- Google introduced DataGemma, a pair of open-sourced models aimed at addressing LLM hallucinations around statistics queries. (Shubham Sharma / VentureBeat)

- Apple and Google’s announcements show that AI is best used to upgrade already existing technology, this columnist writes. (Christopher Mims / Wall Street Journal)

- Third-party app stores will be allowed on iPads in the EU in the next major release of iPadOS. (Romain Dillet / TechCrunch)

- Apple’s Activation Lock for iPhone components, its latest theft-prevention measure, could help deter stolen iPhones from being disassembled into parts. (Ben Lovejoy / 9to5Mac)

- The iPhone 16 series’ pre-order sales are down about 12 percent from last year, with lower than expected demand for the iPhone 16 Pro line. (Ming-Chi Kuo / Medium)

- Despite Apple Intelligence being slow to arrive, iOS 18 is a good upgrade, this reviewer writes. (Allison Johnson / The Verge)

- Amazon will require workers to return to the office five days a week starting next year. (Jay Peters / The Verge)

- Spotify is introducing in-app parental controls that allow parents to use “managed accounts” for users under 13 to prevent them from viewing or listening to content deemed explicit. (Sarah Perez / TechCrunch)

- Slack now lets users add AI agents from Asana, Cohere, Workday and others. (Emilia David / VentureBeat)

- Renowned AI computer scientist Fei-Fei Li unveiled a new startup, World Labs, that aims to teach AI about the physical world and to help build it. (Steven Levy / Wired)

- Online dating may be a factor in the recent widening of the income inequality gap in the US, a new study suggested. (Alexandre Tanzi / Bloomberg)

Those good posts

For more good posts every day, follow Casey’s Instagram stories.

Talk to us

Send us tips, comments, questions, and teen safety tips: casey@platformer.news.