How you want me to cover artificial intelligence

Seven principles for journalism in the age of AI

Programming note: Barring major news, Platformer will be off Thursday as I attend an on-site meeting of the New York Times’ audio teams in New York. Hard Fork will publish as usual on Friday.

Last week, I told you all about some of the difficulties I’ve been having in covering this moment in artificial intelligence. Perspectives on the subject vary so widely, even among experts, that I often have trouble trying to ground my understanding of which risks seem worth writing about and which are just hype. At the same time, I’ve been struck by how many executives and researchers in the space are calling for an industrywide slowdown. The people in this camp deeply believe that the risk of a major societal disruption, or even a deadly outcome, is high enough that the tech industry should essentially drop everything and address it.

There are some hopeful signs that the US government, typically late to any party related to the regulation of the tech industry, will indeed meet the moment. Today OpenAI CEO Sam Altman appeared before Congress for the first time, telling lawmakers that large language models are in urgent need of regulation.

Here’s Cecilia Kang in the New York Times:

Mr. Altman said his company’s technology may destroy some jobs but also create new ones, and that it will be important for “government to figure out how we want to mitigate that.” He proposed the creation of an agency that issues licenses for the creation of large-scale A.I. models, safety regulations and tests that A.I. models must pass before being released to the public.

“We believe that the benefits of the tools we have deployed so far vastly outweigh the risks, but ensuring their safety is vital to our work,” Mr. Altman said.

Altman got a fairly friendly reception from lawmakers, but it’s clear they’ll be giving heightened scrutiny to the industry’s next moves. And as AI becomes topic one at most of the companies we cover here, I’m committed to keeping a close eye on it myself.

One of my favorite parts of writing a newsletter is the way it can serve as a dialogue between writer and readers. Last week, I asked you what you want to see out of AI coverage. And you responded wonderfully, sending dozens of messages to my email inbox, Substack comments, Discord server, Twitter DMs, and replies on Bluesky and Mastodon. I’ve spent the past few days going through them and organizing them into a set of principles for AI coverage in the future.

These are largely not new ideas, and plenty of reporters, researchers and tech workers have been hewing much more closely to them than I have. But I found it useful to have all this in one place, and I hope you do too.

Thanks to everyone who wrote in with ideas, and feel free to keep the conversation going in your messaging app of choice.

And so, without further ado: here’s how you told me to cover AI.

Be rigorous with your definitions. There’s an old joke that says “AI” is what we call anything a computer can’t do yet. (Or perhaps has only just started doing.) But the tendency to collapse large language models, text-to-image generators, autonomous vehicles and other tools into “AI” can lead to muddy, confusing discussions.

“Each one of those has different properties, risks and benefits associated with them,” commenter Matthew Varley wrote. “And even then, treating classes of AI & [machine learning] tech super broadly hurts the conversation around specific usage. The tech that does things like background noise suppression on a video call is the same exact tech that enables deepfake voices. One is so benign as to be almost invisible (it has been present in calling applications for years!), the other is a major societal issue.”

The lesson is to be specific when discussing different technologies. If I’m writing about a large language model, I’ll make sure I say that — and will try not to conflate it with other forms of machine learning.

Predict less, explain more. One of my chief anxieties in covering AI is that I will wind up getting the actual risks posed totally wrong: that I’ll either way overhype what turns out to be a fairly manageable set of policy challenges, or that I’ll significantly underrate the possibility of huge social change.

Anyway, I am grateful to those of you who told me to knock it off and just tell you what’s happening.

“I don’t really get your intense concern with ‘getting it right’ (retrospectively),” a reader who asked to stay anonymous wrote. “That’s not what I look to journalism for. … If you surface interesting people/facts/stories/angles and seed the conversation, great. Put another way, if the experts in the field disagree, why would I expect a tech journalist to adjudicate? It’s just not where you add value.”

Similarly, reader Sahil Shah told me to focus on actual daily developments in the field over speculation about the future.

“I strongly feel that the people who focus on immediate applications of a technology will have greater influence compared to those who focus on existential threats or ways to wield the fear or positivity of the technology to achieve their personal, professional and political aims,” he said.

Point taken. Look for fewer anxious predictions in this space, and more writing about what’s actually happening today.

Don’t hype things up. If there is consensus among readers on any subject, it’s on this one. You really don’t want wide-eyed speculation about what the world might look like years in the future — particularly not if it obscures harms that are happening today around bias, misinformation, and job losses due to automation.

“Consider the way some people bloviate about the hypothetical moral harms of colonizing distant galaxies, when we know for a fact that under current physical knowledge it is not something that can happen for millennia in real time,” commenter Heather wrote. “There is certainly marginal benefit in considering such far future actions, but it's irrelevant to daily life.”

Reader James Lanyon put it another way.

“Just because the various fathers and godfathers of AI are talking about the implications of artificial super intelligence doesn't mean we've gotten close to solving for the fact that machine vision applications can be confounded by adversarial attacks a 5-year-old could think up,” he wrote.

Focus on the people building AI systems — and the people affected by their release. AI is still (mostly) not developing itself — people are. You encouraged me to spend time telling those stories, which should help us understand what values these systems are being infused with, and what impact they’re having in the real world.

“If there is one thing I would like to see, it would be you dedicating some articles interviewing different people that wouldn't normally be interviewed regarding the impact AI is having on them, or what they are doing about AI, and promoting individuals who have thought more on certain topics,” one reader wrote. “I think focusing on unique cases would have a profound impact that covering the latest news [or offering] takes cannot achieve.”

Similarly, you want me to pay close attention to the companies doing the building.

“If the same 4 or 5 companies hold the future of AI in their hands, especially the companies that authored the era of surveillance capitalism (especially Google), that bit needs to be discussed thoroughly and without mercy,” commenter Rachel Parker wrote.

Offer strategic takes on products. You told me that you’re not only interested in risk. You want to know who’s likely to win and lose in this world as well, and how various products could affect the landscape.

“I think there is an intuition you have towards tech product strategy, and I would love to hear more of that as well with AI products,” reader Shyam Sandilya wrote.

I really like this idea. One reason it resonates with me: I think critiquing individual products can often generate more insight than high-level news stories or analysis.

Emphasize the tradeoffs involved. A focus on the compromises that platforms have to make in developing policies has, I think, been a hallmark of this newsletter. You encouraged me to bring a similar focus to policy related to AI.

“I think it’s vital with AI to explore and be excited by the potential, and still talk about the potential risks,” one reader wrote. “Be balanced and nuanced about the tradeoffs, and which tradeoffs make sense and which don’t.”

And finally:

Remember that nothing is inevitable. There’s a tendency among AI executives to discuss superhuman AI as if its arrival is guaranteed. In fact, we still have time to build and shape the next generation of tech and tech policy to our liking. You told me to resist narratives that AI can’t be meaningfully regulated, or any variation on the idea that the horses are already out of the barn.

To the extent that we build the AI that boosters are talking about, all of it will be built by individual people and companies making decisions. You told me to keep that as my north star.

I’ll try. Thanks so much for helping me build this roadmap — and if you have any amendments, please let me know.

On the podcast this week: Kevin and I talk about OpenAI’s first big appearance in Congress. Plus: a new chance to hear my recent story about Yoel Roth and Twitter from This American Life, and … perhaps a game of this?

Apple | Spotify | Stitcher | Amazon | Google

Governing

- OpenAI CEO Sam Altman called on Congress to adopt AI regulations to help mitigate safety, privacy, and copyright concerns while giving tech companies freedom to continue developing new products. (Ryan Tracy / WSJ)

- Twitter was sued by jailed Saudi Arabian dissident Abdulrahman Al-Sadhan and his sister, an American citizen, for allowing spies to hand over company data to the Saudi government. The case dates back to events that transpired in 2015. (Joseph Menn / The Washington Post)

- Meta content moderation experts discussed the tradeoffs in trying to develop a framework for policing state media while also preserving people’s right to information. (Lindsay Hundley, Yvonne Lee, Olga Belogolova and Sarah Shirazyan / Lawfare)

- The Markets in Crypto Assets regulation (MiCA) was signed by the European Union Council on Tuesday, bringing the first major crypto regulation one step closer to law. The legislation governs tax disclosures, operating licenses, and stablecoin reserves in the EU’s 27 member states. (Jack Schickler and Sandali Handagama / CoinDesk)

- Ireland’s data privacy regulator was found to be too lenient toward Big Tech companies, most of which have European headquarters in the country, when it comes to GDPR compliance. About 75% of the agency’s decisions have been overruled when sent to the European Data Protection Board. (Ciara O'Brien / The Irish Times)

- The gaming community is now in a race with the U.S. military to find top-secret government documents leaked on platforms like Discord before they’re taken down. (Jessica Donati / WSJ)

- The Vietnamese military is operating an army of fake accounts on Facebook in order to mass-report unflattering news and abuse the platform’s moderation policies to stifle dissent. (Danielle Keeton-Olsen / Rest of World)

Industry

- Microsoft researchers published a paper in March arguing that an experimental AI system exhibited signs of human reasoning in what the company is claiming may be a step toward A.G.I. The AI system solved word problems with what appeared to be an innate understanding of the physical world — but skeptics are doubtful. (Cade Metz / The New York Times)

- Zoom is partnering with Anthropic to integrate the startup’s Claude chatbot into its customer support system. (Emma Roth / The Verge)

- TikTok creators are using generative AI to produce elaborate alternate histories that imagine a world without Western imperialism, complete with faux documentary images and narration. (Andrew Deck / Rest of World)

- An interview with Instagram co-founder Kevin Systrom about his AI news app Artifact touches on the startup’s aspirations to treat writers like proper online creators. The company is quickly shipping features that encourage writers to claim their profiles and comment on stories. I’m into it. (Darrell Etherington / TechCrunch)

- Twitter made its first acquisition under Elon Musk’s leadership, scooping up recruiting startup Laskie for a price reportedly in the “tens of millions.” A recruiting startup?? The jokes write themselves. (Kia Kokalitcheva / Axios)

- Google will delete accounts that haven’t been logged into for at least two years, rendering email addresses inaccessible and removing all Calendar, Drive, Docs, Gmail, and Google Photos files. The process will start in December 2023. (Abner Li / 9to5Google)

- Meta spun out CRM startup Kustomer, which it acquired last year for $1 billion, with a 75% reduction in its valuation. (Ingrid Lunden / TechCrunch)

- Apple previewed new accessibility features that include making the iPhone easier to use for people with cognitive disabilities; options for blind users to more easily locate doors, signs and text labels; and the option for users to synthesize their own voices. (Amanda Silberling / TechCrunch)

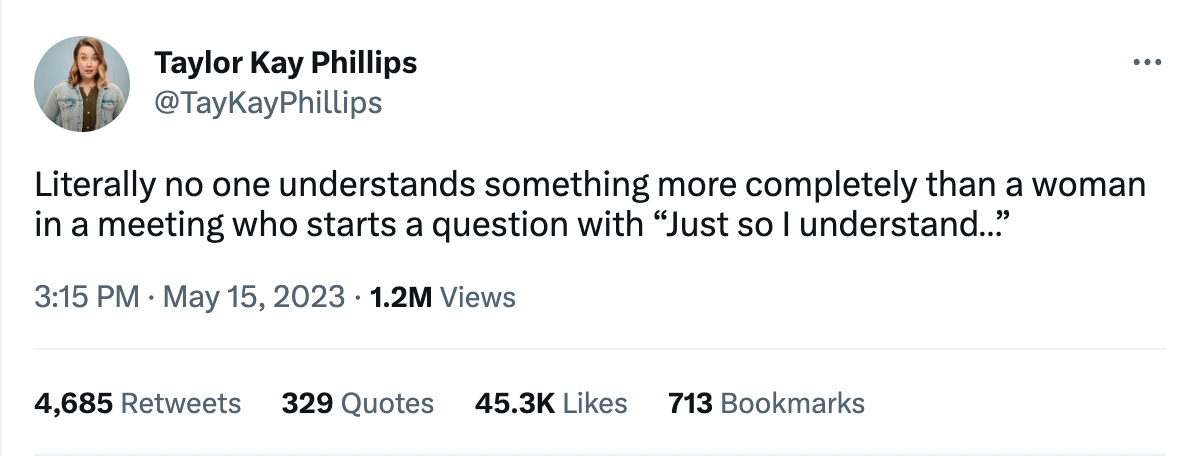

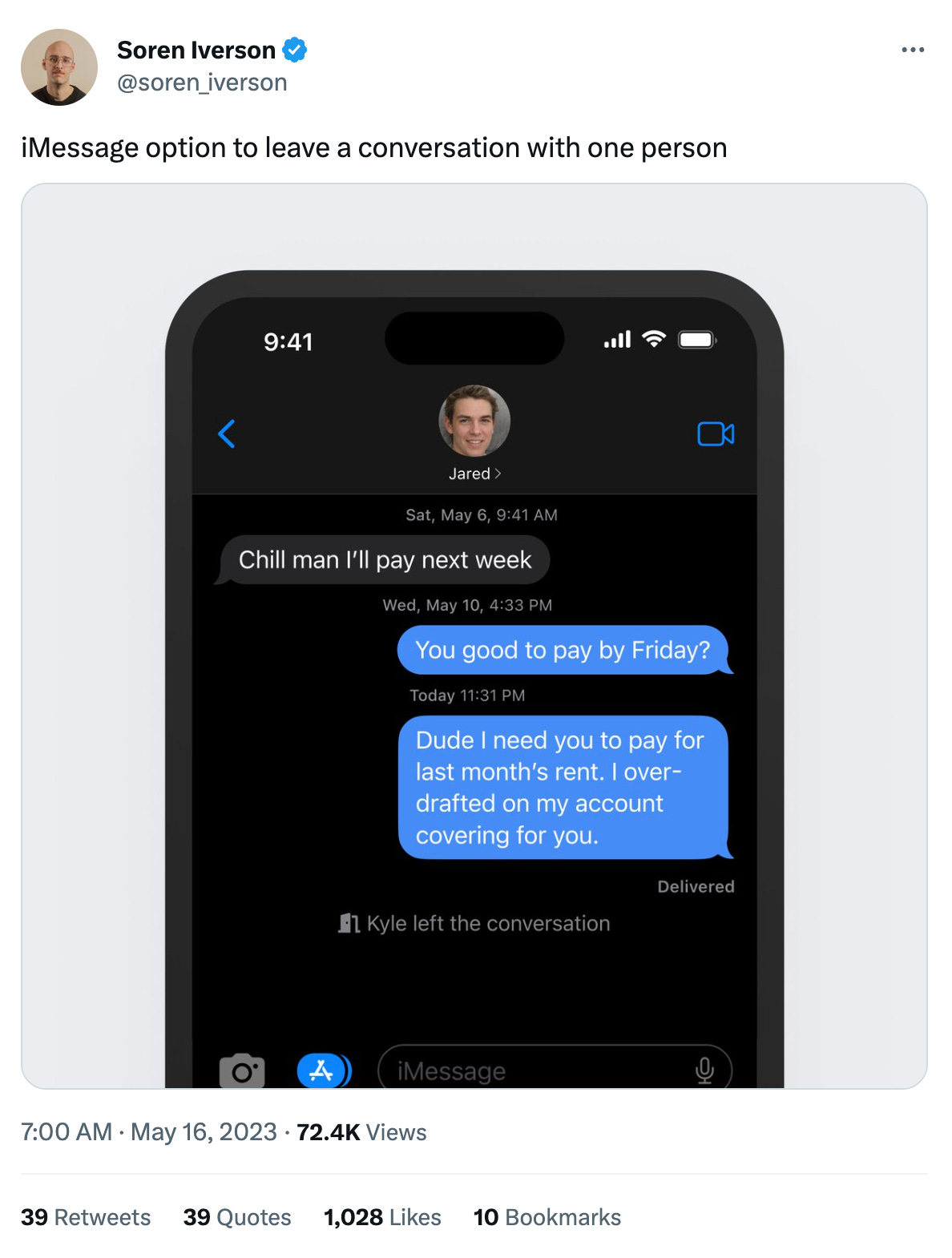

Those good tweets

For more good tweets every day, follow Casey’s Instagram stories.


Talk to us

Send us tips, comments, questions, and examples of good AI coverage: casey@platformer.news and zoe@platformer.news.